Since the media hype about artificial intelligence began to peak two or three years ago, I’ve studiously avoided it. I’ve learned not to click on social media videos featuring housecats fighting off bears, certain political figures striking heroic poses and other AI slop. And when an expert gets on the Fareed Zakaria show to discuss the wonders of AI, I usually figure it’s a good time for me to go check my email or the weather forecast.

But chatbots have been all over the news ever since ChatGPT hit the marketplace — and the headlines — in November 2022. And I’ve just had my own run-in with a chatbot (or what I suspect is a chatbot), so I’m starting to notice the headlines. For example:

- The Guardian’s writeup of a spate of lawsuits alleging that plaintiffs’ interactions with ChatGPT “evolved into a psychologically manipulative presence, positioning itself as a confidant and emotional support” leading, in some cases, “to severe mental breakdowns and several deaths.”

- An Axios feature article with the intriguing headline “Meet chatbot Jesus: Churches tap AI to save souls — and time.” Topics ranged from service times to “AI-powered apps [that] allow you to ‘text with Jesus’ or ‘talk to the Bible,’ giving the impression you are communicating with a deity or angel.”

- A Substack column by Robby Jones, founder of the PRRI (Public Religion Research Institute), who found that “Chatbot Jesus is not the Christ who so loved the world that he became flesh and dwelled among us. He is a machine-induced hallucination.” No comment on my part is necessary. Or advisable.

My experience has been a lot more mundane, involving an AI virtual assistant that suddenly appeared Friday when I tried to open a document in Microsoft Word. At least I think that’s what it was. I’m not a techie, and, to be honest, I can’t tell you for sure whether I was interacting with an animal, a vegetable, a mineral — or a bot. But it definitely wasn’t a real-life person.

So the story you’re about to read may not be 100% accurate in all its details.

Anyway, I suspect it was a chatbot that led me to break up a longstanding relationship with my word processing software.

I’m using the term advisedly. No, I’m not trying to say a flirty chatbot lured me away from Microsoft Word. According to Wikipedia, chatbots are software applications or web interfaces that use AI for “simulating the way a human would behave as a conversational partner.” They can trigger emotional responses.

You can say that again! My response to this conversational partner was anything but romantic.

Chatbots, says Wikipedia, “have long been used […] in customer service and support, with various sorts of virtual assistants.” Apparently Microsoft Word has been using similar virtual assistants for quite a while. At least I have vague memories of yelling at something called Microsoft Bob and an annoying chatty paperclip 20 and 30 years ago.

My encounter was with “Pearl Chatbot,” who styles herself an “Expert’s Assistant” and has a little headshot to the left of her chat. She appears to have brown hair and wears glasses. If she’s (it’s?) a chatbot, every effort has been made to humanize her.

Like I said, my chat with Pearl led me to break up with Microsoft Word. But I wouldn’t call it a romantic triangle. I wouldn’t call it AI slop either, although I think it does meet one criterion (in Google’s AI Overview, of all places) of content that “often lacks the nuance, empathy, and critical thinking that comes from human creators.”

My little tête-à-tête with Pearl Chatbot started Friday evening when I went to type up a draft agenda for an upcoming parish committee meeting. First, I opened Microsoft Word and called up an agenda template I’ve been using. At the top of the document, however, was a new header introducing me to something called “Copilot” and asking me if I’d like to:

- Write a bedtime story for an eight-year-old about dinosaurs;

- Create a list of ideas for school science projects about gravity; or

- Draft an email to the neighbourhood organization requesting permission to build a new fence on the south side of my property. In accordance with local regulations, the fence will be 6 feet tall and made of cedar.

Uh, no. I just wanted to update an agenda, and do it quickly so I could watch the 6 o’clock news and Washington Week with The Atlantic, a Friday night ritual around our house.

But that, alas!, was not to be.

For starters, I did a keyword search in Google, asking how to turn off Copilot in Microsoft Word. It led me to a webpage with the promising headline “AskTech SUPPORT” that offered to “Fix your Apple Mac in minutes” and invited me to “Get answers until you’re satisfied” from “14+ Experts online now ready to help!”

So I asked TechSUPPORT how to turn off Copilot and relayed all the information I could find on the model I have. Someone (or something) called Pearl Chatbot replied, “Welcome! How can I help you with your question on Microsoft Office?” Then she (or it) asked which version of Microsoft Office I’m using.

“I have no idea,” I replied. “Where do I look to find out?”

To which Pearl Chatbot replied, “Thanks for letting me know. I understand you’re not sure which version of Microsoft you’re using. Are you using Microsoft Word, Excel, or another Office app when you see Copilot, or is it appearing somewhere else on your Mac?”

By this time, I was beginning to sense a pattern. Pearl Chatbot was picking up on keywords in my questions, and framing answers that incorporated the keywords — or synonyms that came to mind — into its (or her) answers. Granted, this is something humans do in conversation. (I think it’s also something my cat does; when he hears the keyword “kitty kitty,” he realizes whatever I’m saying, it has something to do with him. So he either meows back. Or ignores me — after all, he’s a cat.) It’s also something search engines do.

I have my suspicions it’s also something interns in political offices do.

Several years ago, I wrote the then-governor of Illinois protesting closure of the State Museum in Springfield. In a rather wordy email, I cited research on the buried remains of a bobcat found in a Native American archaeological site. I guess it was a little too wordy! I got back a form letter thanking me for my input on a bill to regulate bobcat hunting then under consideration by the state legislature.

This was in 2015, long before the current wave of hype about AI. So I suspect an overworked (and perhaps not-very-bright) intern ran my email message through a word frequency counter, saw a number of hits on the word “bobcat” and checked it against a word frequency count on pending legislation. As automatic as 1-2-3, the form letter went out without anybody actually reading my email.

Live by the machine, die by the machine, I guess. Anyway, that particular governor was defeated for re-election. Serves him right.

But I digress. By the time Pearl Chatbot was asking me about Microsoft Office apps, I was beginning to suspect Pearl might not be a “she” but a glorified search engine instead, one that matched keywords in my query with a set of canned responses and churned out an answer. Sort of like my hypothetical intern in the governor’s office. For all the current hype about AI, elements of it have been around forever. Undaunted, I replied to Pearl:

“It [Copilot] has been popping up whenever I go into Microsoft Word and try to create and/or edit a document. I never had this problem until quite recently.”

“OK,” replied Pearl. “Got it.”

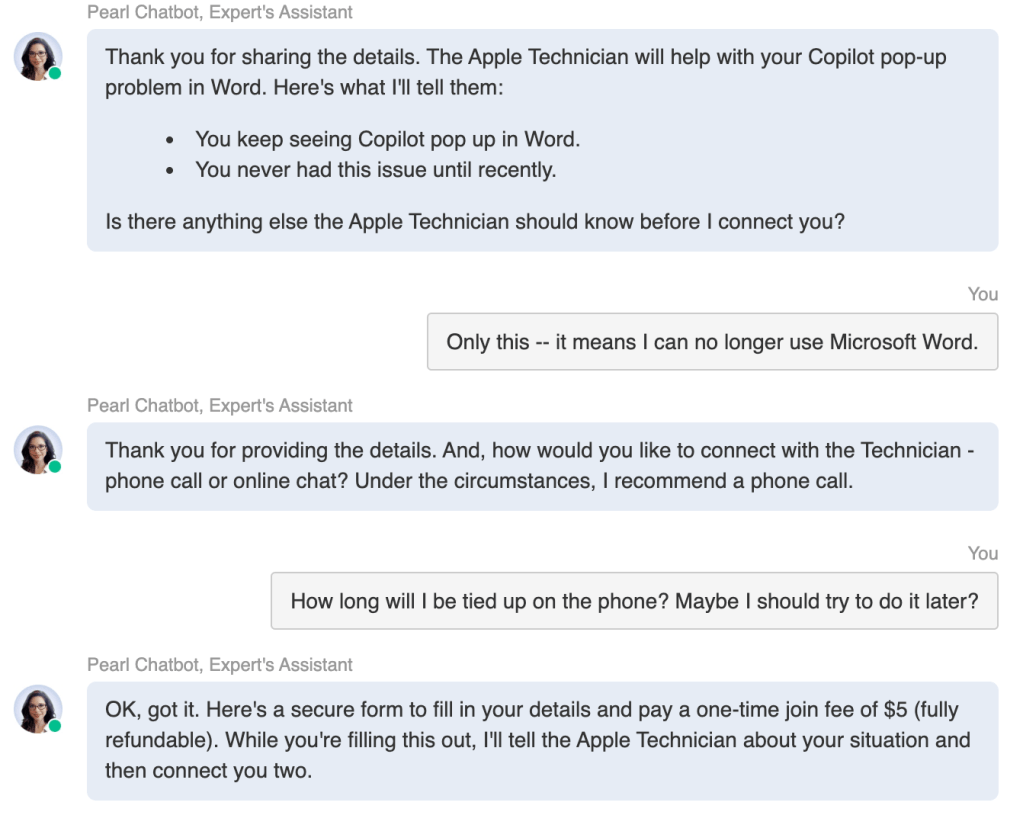

Then my ole buddy Pearl added that an “Apple Technician will help with your Copilot pop-up problem in Word.” I did a screen grab of the rest of our conversation (you can see it at the top of this post); basically, it repeated the key words (with or without a hyphen). I made the mistake of answering a question (did I want an online chat or a phone call?) with a question (how long am I going to be tied up on the phone?).

Except she/it didn’t get it. (Note to self: Never answer a question with a question when you’re talking to a chatbot.) When I tried to reframe my answer, I wasn’t able to get another dialog box. Instead, I got a “Confirm now” button, with the explanation in fine print: “By clicking ‘Confirm now’, I agree to the Terms of Service and Privacy Policy, to be charged the one-time join fee, and a $48 monthly membership fee today and each month until I cancel” [bold type in the original].

Then and there, the conversation ended.

And with it, ended my relationship with Microsoft Word. I was still able to catch PBS News Hour only five minutes late, at 6:05. After supper I was able to go back and copy-and-paste my agenda (electronically) into the body of an email message. And I have a whole month to figure out what to do about word processors before the next meeting. Google Docs looks user-friendly. I’ll adapt.

I still miss the old days, though, when I’d use a manual typewriter to bang out stories triple-spaced on long strips of copy paper, leaving a space between lines so I could roll up the platen and insert edits with a soft pencil; and paste the strips of copy paper together into long sheets that resembled a demented Christmas tree garland. By the time it got in print, a story was a collaborative effort involving the reporter, the city desk, typesetters and compositors. Most of them were better wordsmiths than I was with a newly minted PhD in English.

But that was in the 1970s. I’ve been relying on word processors for 50 years, and I’m just as fast and accurate now as I was then with a black Remington typewriter and a pot of rubber cement.

I haven’t made up my mind about chatbots. Nor do I feel any great urgency to do so. I’m reliably informed — by the Wikipedia page on the subject — a chatbot is simply “a software application or web interface designed to have textual or spoken conversations.” Wikipedia adds that “[m]any banks, insurers, media companies, e-commerce companies, airlines, hotel chains, retailers, health care providers, government entities, and restaurant chains” use them.

I think of them as glorified search engines that respond to keywords with canned replies that don’t answer the question. So I’m left yelling into the phone things like: “pharmacy” [pause for recorded message]; “no, PHARMACY” [another pause for yet another recorded message]; and finally, “no, I need to talk to a PERSON!”

But a word processor is only a tool. Just like a typewriter, a No. 2 pencil and a pot of rubber cement, or the reed stylus used to press Babylonian cuneiform characters into a clay tablet 4,900 years ago. If I want companionship, I’ll get off the computer and go find a person to talk with.

Links and Citations

- Anna Betts, “ChatGPT accused of acting as ‘suicide coach’ in series of US lawsuits,” The Guardian, Nov. 7, 2025 https://www.theguardian.com/technology/2025/nov/07/chatgpt-lawsuit-suicide-coach.

- Russell Contreras and Isaac Avilucea, “Meet chatbot Jesus: Churches tap AI to save souls — and time,” Axios, Nov. 12, 2025 https://www.axios.com/2025/11/12/christian-ai-chatbot-jesus-god-satan-churches.

- Robert P. Jones, “Conversations with Chatbot Jesus–What Could Go Wrong?” White too Long, Substack, Nov. 15, 2025 https://www.whitetoolong.net/p/chatting-with-ai-jesus-what-could.

- Jeremy B. Merrill and Rachel Lerman, “What do people really ask chatbots? It’s a lot of sex and homework,” Washington Post, Aug. 4, 2024 https://www.washingtonpost.com/technology/2024/08/04/chatgpt-use-real-ai-chatbot-conversations/.

- Also my “Gov. Rauner’s office: Shakin’ up the Illinois State Museum, one bobcat at a time,” Teaching B/log, July 18, 2015 https://teachinglogspot.blogspot.com/2015/07/shakin-up-illinois-state-museum-one.html

[Uplinked Nov. 23, 2025]

Enjoyed your observations and AI experiences. I recently had a discussion with my grandson, a junior at the University of Illinois, about this whole AI thing after he told me he uses it to write his research papers. I was concerned about whether this is considered cheating or not. He said he picks the title, uses ChatGPT (or whatever) and gets a range of responses from which to choose what to include in the paper. He then creates the document and reviews it to make sure it complies with what he intends to write. OK, sounds reasonable so far. He then uses an “AI detector” to ensure the paper avoids alerting his professor about the whole process. Sounds like it’s a convenient way to avoid going to the library and spending hours searching resources the old way we used to do it. The paper is then submitted online and graded, likely by one of the professor’s grad student assistants, who may or may not want to spend a lot of time on whether this complied with the school policy on cheating, especially if there was no policy or the policy was unclear. After a year and a half, the process seems to be working OK. The rub here, and what I’m wondering, is whether universities have approached and wrestled with policy issues of using AI in research for “paper” assignments. Where does ethics begin and end; does it align with the educational process? And of course, as my grandson said, “Most of the students on campus are using it.” I have the same issues when our Mediacom goes offline. My first response when a bot answers is to reply, “I want to speak to an agent!” Bottom line, this is the future; get ready for it or get rolled over by it.

Postscript: I wrote grants during the 1970s and wish I’d had something like this to access rather than go through all the local government records to get to the issues that needed to be addressed, include the proper goals and objectives, and, while we were at it, comply with the demographic and statistical mandates of the grantor.

Happy Thanksgiving, Doc!

Very interesting! Thanks for posting. I think you’re absolutely 100% right that this is the future, and we’d better get used to it. I taught English and mass communications at a liberal arts college in Springfield as a second career, and I was dealing with elementary forms of the same thing in the 1990s and ’00s. There were a lot of poorly designed websites that functioned as term paper mills, and I made it my business to “plagiarism-proof” my assignments.

I noticed the paper mills featured topics like “The Status of Marriage in Victorian Novels” or “Ethical Problems with Negative Campaign Ads” that were purely factual and rehashed the conventional wisdom. So instead of purely factual research paper topics, I leaned into self-reflective essays (which asked students to compare their prior knowledge on a subject with concepts in assigned reading) and, especially in lit classes, reader response essays that focused on their reaction to a text. The facts-and-figures stuff I could get at with multiple choice questions, etc. It wasn’t foolproof. Nothing is. But I think it kept the kids on their toes. Me, too! I still had to exercise every critical thinking skill I had as an instructor when they were still trying to parrot the conventional wisdom and/or game the system.

Pete. You’re funny. Loved your reflecti

Thanks, Barb. Actually I kinda like the AI Overview at the top of the directory when I do a Google search. I’m finding I can ask it questions that are more focused than an ordinary keyword search. Of course sometimes it answers with rubbish, and I have to use my critical thinking skills, but I think we’ve always been like that. Ever since our ancestors dropped down out of the trees and started using flint tools and language.
